Genshin Shaders

The first part of our project was to create custom shaders for Genshin Impact, and we broke down this task into multiple parts:

- Injecting Shaders into Genshin Impact

- Creating the Kuwahara Filters

- Creating the Noir Shaders

- Creating the Cel Shaders

Injecting Shaders into Genshin Impact

To do this, we first needed a way to inject shaders into the game. Shaders are part of the rendering pipeline, which most games do not expose to the end user, since game developers generally do not want players to modify their game. Such modifications are typically blocked by anti-cheat systems. Genshin Impact has a built-in anti-cheat, so we needed a way to bypass it in order to inject our own shaders.

We were able to find the Genshin-Impact-ReShade repository on GitHub that accomplished this exact task, and upon installation onto a Windows machine, we were able to inject the built-in shaders.

These shaders are written using ReShade FX, which is a shading language and compiler whose syntax is very closely related to HLSL.

To avoid getting Levy's actual Genshin Impact account banned, Levy created a new account for this project.

Creating the Kuwahara Filters

The first shader that we tackled was the Kuwahara filter. The Kuwahara filter creates a painting-like flattening effect along the local feature directions while preserving edges and shape boundaries. We chose this approach over bilateral filters and mean shift filters because it is effective on both high- and low-contrast images. We created three different versions of the Kuwahara filter: one based on square quadrants, one based on circular kernels, and one based on anisotropic kernels.

Kuwahara Filter Based on Square Quadrants

To create the Kuwahara filter based on square quadrants, we use a square window made of four square quadrants, each $(r+1) \times (r+1)$ pixels for radius $r$. The four quadrants all overlap in exactly one pixel, which is the pixel currently being processed.

$$ \begin{aligned} & W_0=\left[x_0-r,\ x_0\right] \times\left[y_0,\ y_0+r\right], \\ & W_1=\left[x_0,\ x_0+r\right] \times\left[y_0,\ y_0+r\right], \\ & W_2=\left[x_0,\ x_0+r\right] \times\left[y_0-r,\ y_0\right], \\ & W_3=\left[x_0-r,\ x_0\right] \times\left[y_0-r,\ y_0\right]. \end{aligned} $$

First, we calculate the following:

$$ |W_k|=\left(r+1\right)^2 $$

Here $|W_k|$ is the number of pixels in the quadrant we are computing, with $r$ defined as the radius.

Then, using the previous definition, we calculate the mean $m_k$ as follows:

$$ m_k=\frac{1}{\left|W_k\right|} \sum_{(x, y) \in W_k} f(x, y). $$

We calculate the variance as the average of the squared distance of each pixel from the mean, as follows:

$$ \begin{aligned} s_k^2 &=\frac{1}{\left|W_k\right|} \sum_{(x, y) \in W_k}\left(f(x, y)-m_k\right)^2 \\ &=\frac{1}{\left|W_k\right|} \sum_{(x, y) \in W_k} f^2(x, y)-m_k^2. \end{aligned} $$

In this model, we assume that the variances of the color channels are uncorrelated, so the final variance for $W_k$ is the sum of the per-channel variances:

$$\sigma_k^2=s_{k, r}^2+s_{k, g}^2+s_{k, b}^2.$$

Now, the output value for our pixel at $(x_0, y_0)$ is going to be:

$$ F(x_0, y_0) := m_i, \quad\quad i=\text{argmin}_k \sigma_k. $$

Here is the source code for our implementation of the Kuwahara filter based on square quadrants.

Here is a comparison between the base shaders and the square Kuwahara shader.
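As a rough illustration of the steps in this subsection, here is a minimal CPU-side sketch in Python. It is not our ReShade FX shader; the function name `kuwahara_square`, the list-of-tuples image layout, and the clamping at the image border are assumptions made for the example.

```python
def kuwahara_square(image, radius):
    """Square-quadrant Kuwahara filter.

    image: list of rows, each pixel an (r, g, b) tuple of floats.
    Returns a new image of the same shape.
    """
    height, width = len(image), len(image[0])
    # (dx range, dy range) for the quadrants W_0..W_3 defined in the text.
    quadrants = [
        (range(-radius, 1), range(0, radius + 1)),
        (range(0, radius + 1), range(0, radius + 1)),
        (range(0, radius + 1), range(-radius, 1)),
        (range(-radius, 1), range(-radius, 1)),
    ]
    output = []
    for y0 in range(height):
        row = []
        for x0 in range(width):
            best_mean, best_var = None, float("inf")
            for dxs, dys in quadrants:
                sums = [0.0, 0.0, 0.0]
                sq_sums = [0.0, 0.0, 0.0]
                count = 0  # ends up as (radius + 1) ** 2
                for dy in dys:
                    for dx in dxs:
                        # Assumption: clamp coordinates at the image border.
                        x = min(max(x0 + dx, 0), width - 1)
                        y = min(max(y0 + dy, 0), height - 1)
                        for c in range(3):
                            value = image[y][x][c]
                            sums[c] += value
                            sq_sums[c] += value * value
                        count += 1
                mean = [s / count for s in sums]
                # sigma_k^2: per-channel E[f^2] - m^2, summed over r, g, b.
                var = sum(sq / count - m * m for sq, m in zip(sq_sums, mean))
                if var < best_var:
                    best_mean, best_var = tuple(mean), var
            row.append(best_mean)
        output.append(row)
    return output
```

On an image with a hard vertical edge, every pixel has at least one quadrant that lies entirely on its own side of the edge, so the filter returns the image unchanged; this is the edge-preserving behaviour described above.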

Kuwahara Filter Based on Circular Kernels

Next, we created the Kuwahara filter based on circular kernels. Instead of square quadrants, the circular filter is divided into sectors, which play the same role as the quadrants in the square version. First, we calculate $\sigma_r$ and $\sigma_s$ as follows, where the circular kernel's size, $K_{\text{size}}$, is defined to be 32:

$$ \begin{aligned} \sigma_r & =\frac{1}{2} \cdot \frac{K_{\text {size }}-1}{2}=7.75, \\ \sigma_s & =0.33 \cdot \sigma_r. \end{aligned} $$

Then, we can calculate Gaussians with standard deviations $\sigma_r$ and $\sigma_s$ using the following formula:

$$ G_\sigma(x, y)=\frac{1}{2 \pi \sigma^2} \exp \left(-\frac{x^2+y^2}{2 \sigma^2}\right) $$

Next, we can define our smooth weighting function $w_k$ using the following formula:

$$ w_k=\left(\chi_k \star G_{\sigma_s}\right) \cdot G_{\sigma_r} $$

Here, $\chi_k$ is the characteristic function of sector $k$. It is convolved with the Gaussian of standard deviation $\sigma_s$, and the result is then multiplied by the Gaussian $G_{\sigma_r}$.
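The weighting function can be sketched on the CPU as follows. This is a brute-force illustration rather than our shader code: the sector count `N_SECTORS = 8`, the finite convolution `window`, and the helper names are assumptions made for the example.

```python
import math

K_SIZE = 32                        # kernel size from the text
SIGMA_R = 0.5 * (K_SIZE - 1) / 2   # 7.75
SIGMA_S = 0.33 * SIGMA_R
N_SECTORS = 8                      # assumed sector count (hypothetical)


def gaussian(x, y, sigma):
    """2D Gaussian G_sigma(x, y)."""
    norm = 2.0 * math.pi * sigma * sigma
    return math.exp(-(x * x + y * y) / (2.0 * sigma * sigma)) / norm


def sector_index(x, y, n=N_SECTORS):
    """Index of the sector containing (x, y); sector 0 is centered on +x."""
    angle = math.atan2(y, x)
    return math.floor((angle + math.pi / n) / (2.0 * math.pi / n)) % n


def sector_weight(k, x, y, window=8):
    """w_k(x, y) = (chi_k convolved with G_sigma_s)(x, y) * G_sigma_r(x, y)."""
    smoothed = 0.0
    norm = 0.0
    for dy in range(-window, window + 1):
        for dx in range(-window, window + 1):
            g = gaussian(dx, dy, SIGMA_S)
            norm += g
            # chi_k is 1 inside sector k and 0 elsewhere.
            if sector_index(x - dx, y - dy) == k:
                smoothed += g
    # Normalize the truncated smoothing kernel, then apply the radial falloff.
    return (smoothed / norm) * gaussian(x, y, SIGMA_R)
```

Because the sectors partition the plane in this sketch, summing `sector_weight(k, x, y)` over all `k` gives back `gaussian(x, y, SIGMA_R)`, which is a convenient sanity check.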

Now, the weighted mean $m_k$ at a point $(x_0, y_0)$ is defined as the following:

$$ m_k=\sum_{(x, y) \in \mathbb{Z}^2} f(x, y) \ w_k\left(x-x_0,\ y-y_0\right) $$

The convolution smooths the characteristic functions so that neighboring sectors slightly overlap with one another. Also, notice that the $w_k$ sum to a Gaussian kernel (up to normalization), so the $m_k$ together add up to a Gaussian-filtered version of the image.

Now, the weighted variance is defined as the following:

$$ \begin{aligned} s_k^2 &=\sum_{(x, y) \in \mathbb{Z}^2}\left(f(x, y)-m_k\right)^2 \ w_k\left(x-x_0,\ y-y_0\right) \\ &=\sum_{(x, y) \in \mathbb{Z}^2} f^2(x, y) \ w_k\left(x-x_0,\ y-y_0\right)-m_k^2. \end{aligned} $$

The second form assumes that the weights $w_k$ are normalized to sum to 1. The output of our filter is a weighted average of the sector means, where the weights are derived from the variances. To compute these weights, we define $\alpha_k$ to be the following:

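$$ \alpha_k=\frac{1}{1+\left(255 \cdot \sigma_k^2\right)^{q / 2}}. $$

Here $q$ is a tunable parameter that controls the sharpness of the output, and $\sigma_k^2$ is the sum of the per-channel variances as in the square version. The final output for a pixel $(x_0, y_0)$ is the following: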

$$ F\left(x_0, y_0\right):=\frac{\sum_k \alpha_k m_k}{\sum_k \alpha_k} . $$

In practice, $w_k$ is fairly expensive to compute, since it requires a convolution. Instead of computing $w_k$ for every pixel, one at a time, we create a texture map from which we can sample $w_k$ using bilinear interpolation. Furthermore, since every sector of the circle is the same sector rotated by a multiple of $2\pi/N$, where $N$ is the number of sectors, $w_k$ can be calculated with the following function:

$$ w_k(x, y)=K_0\left(\left(\begin{array}{l} 0.5 \\ 0.5 \end{array}\right)+\frac{R_{-2 \pi k / N}(x, y)}{2 r}\right) $$

To obtain the texture from which $w_k$ is sampled, we wrote a compute shader that generates it dynamically from Gaussian values computed on the fly. We call this texture $K_0$.
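The lookup into $K_0$ can be sketched in Python as below. The helper names `kernel_texcoord` and `sample_bilinear` are hypothetical, and the mapping of `[0, 1]` coordinates onto pixel indices is a simplifying assumption; a GPU sampler handles texel centers and addressing modes on its own.

```python
import math


def kernel_texcoord(x, y, k, n_sectors, radius):
    """Texture coordinate in K_0 for the offset (x, y) and sector k."""
    theta = 2.0 * math.pi * k / n_sectors
    c, s = math.cos(theta), math.sin(theta)
    # R_{-theta}: rotate the offset so that sector k lines up with sector 0.
    xr = c * x + s * y
    yr = -s * x + c * y
    return 0.5 + xr / (2.0 * radius), 0.5 + yr / (2.0 * radius)


def sample_bilinear(texture, u, v):
    """Bilinearly interpolate a 2D list of floats at (u, v) in [0, 1]^2."""
    height, width = len(texture), len(texture[0])
    fx = min(max(u, 0.0), 1.0) * (width - 1)
    fy = min(max(v, 0.0), 1.0) * (height - 1)
    x0, y0 = int(fx), int(fy)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    tx, ty = fx - x0, fy - y0
    top = texture[y0][x0] * (1.0 - tx) + texture[y0][x1] * tx
    bottom = texture[y1][x0] * (1.0 - tx) + texture[y1][x1] * tx
    return top * (1.0 - ty) + bottom * ty
```

With eight sectors, an offset pointing straight up in sector 2 maps to the same texture coordinate as an offset pointing right in sector 0, which is why a single texture is enough for all sectors.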

Here is the source code for our implementation of the Kuwahara filter based on circular kernels.

Here is a comparison between the base shaders and the circular Kuwahara shader.
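To make the variance-based weighting from this subsection concrete, here is a small Python sketch of the final combination step. The function name `combine_sectors` and the default `q = 8.0` are assumptions for illustration, not values taken from our shader.

```python
def combine_sectors(means, variances, q=8.0):
    """Blend the sector means m_k using the weights alpha_k.

    means: list of (r, g, b) tuples, one per sector.
    variances: list of sigma_k^2 values, one per sector.
    q: sharpness exponent (assumed default).
    """
    alphas = [1.0 / (1.0 + (255.0 * var) ** (q / 2.0)) for var in variances]
    total = sum(alphas)
    return tuple(
        sum(alpha * mean[c] for alpha, mean in zip(alphas, means)) / total
        for c in range(3)
    )
```

A sector with near-zero variance receives a weight close to 1, while a high-variance sector is almost ignored, so the output is dominated by the most homogeneous sectors. When all variances are equal, the result reduces to a plain average of the sector means.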

Kuwahara Filter Based on Anisotropic Kernels

Finally, we implemented the most difficult version of the Kuwahara filter, which uses anisotropic kernels. Compared with the circular version, the anisotropic version lets the circle stretch and shrink in different directions, so that the resulting ellipse's principal direction matches the direction of the local image features. For instance, if the pixel that we are sampling is on an edge, the circle becomes an ellipse whose principal direction runs along the edge.

This filter is by far the hardest to implement.

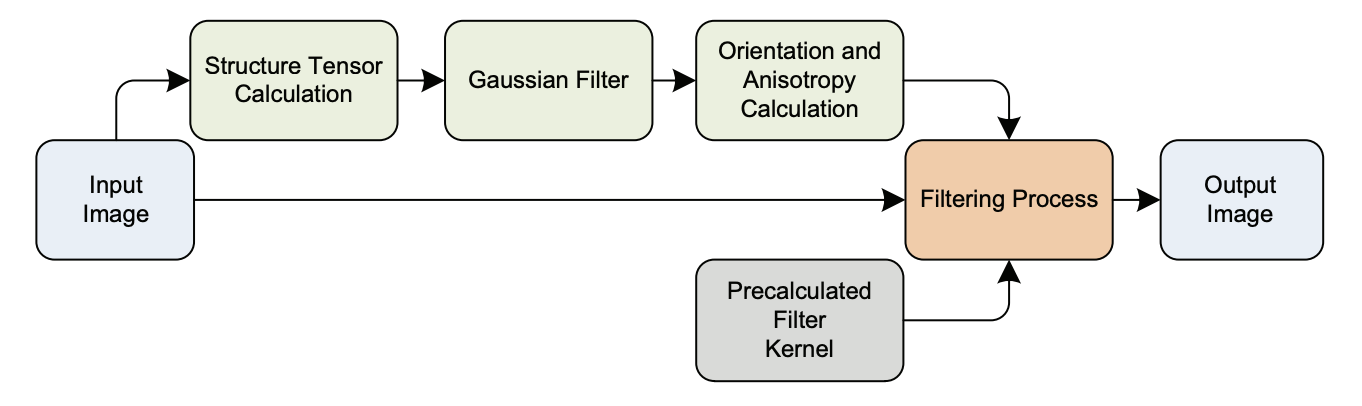

To accomplish this, we process the image at each pixel in multiple stages: we first calculate the structure tensor, then compute the Gaussian filter and the orientation/anisotropy, and finally filter and return the result.

The first step is to calculate the structure tensor from the RGB values of the input. Let $f$ be the input image, and let $S_x$ and $S_y$ be the horizontal and vertical convolution masks of the Sobel edge detection filter:

$$ S_x=\frac{1}{4}\left(\begin{array}{rrr} +1 & 0 & -1 \\ +2 & 0 & -2 \\ +1 & 0 & -1 \end{array}\right) \quad \text { and } \quad S_y=\frac{1}{4}\left(\begin{array}{rrr} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{array}\right) $$

The approximations of the partial derivatives of $f$ are obtained by convolving $f$ with $S_x$ and $S_y$:

$$ f_x=(S_x \star f) \text { and } f_y=(S_y \star f). $$

Now, the structure tensor of ${f}$ is defined as follows:

$$ \left(g_{i j}\right)=\left(\begin{array}{ll} f_x \cdot f_x & f_x \cdot f_y \\ f_x \cdot f_y & f_y \cdot f_y \end{array}\right)=:\left(\begin{array}{ll} E & F \\ F & G \end{array}\right) $$

To get the structure tensor's eigenvalues and the eigenvector in the direction of the minimum rate of change, we do the following:

$$ \lambda_{1,2}=\frac{E+G \pm \sqrt{(E-G)^2+4 F^2}}{2}, \quad\quad t=\begin{pmatrix}\lambda_1-E\\-F\end{pmatrix}. $$

Now, the angle by which the ellipse is rotated is the following:

$$ \varphi=\arg t. $$

We calculate the measure of anisotropy as follows, with $0$ being isotropic and $1$ being entirely anisotropic:

$$ A=\frac{\lambda_1-\lambda_2}{\lambda_1+\lambda_2} $$

The next step is to compute the Gaussian filter and the orientation/anisotropy.
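The eigenvalue step can be written out as a short Python sketch. The function names are hypothetical, and the fallback direction for a zero eigenvector and the zero anisotropy for flat regions are assumptions added so that the example is well defined everywhere.

```python
import math


def structure_tensor(fx, fy):
    """Entries (E, F, G) from the RGB derivative vectors fx and fy."""
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))
    return dot(fx, fx), dot(fx, fy), dot(fy, fy)


def orientation_and_anisotropy(E, F, G):
    """Return (phi, A) for the structure tensor [[E, F], [F, G]]."""
    root = math.sqrt((E - G) ** 2 + 4.0 * F * F)
    lambda1 = 0.5 * (E + G + root)
    lambda2 = 0.5 * (E + G - root)
    # Eigenvector for the direction of minimum rate of change.
    tx, ty = lambda1 - E, -F
    if tx == 0.0 and ty == 0.0:
        tx, ty = 0.0, 1.0  # assumed fallback when t degenerates to zero
    phi = math.atan2(ty, tx)
    total = lambda1 + lambda2
    # Assumption: treat completely flat regions as isotropic.
    anisotropy = (lambda1 - lambda2) / total if total > 0.0 else 0.0
    return phi, anisotropy
```

For a gradient that points purely in the $x$ direction (a vertical edge), this returns $\varphi = \pi/2$ and $A = 1$, so the kernel is oriented along the edge.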

Recall that the equation of the rotated ellipse is the following:

$$ \frac{(x \cos \varphi-y \sin \varphi)^2}{a^2}+\frac{(x \sin \varphi+y \cos \varphi)^2}{b^2}=1. $$

Once we write this equation in general conic form and plug in the values, we get the following. Note that the coefficients $A$ through $F$ here are local to this derivation and are distinct from the anisotropy $A$ and the structure tensor entries $E$, $F$, $G$ above:

$$ P(x, y)=A x^2+B y^2+C x+D y+E x y+F=0, \quad \quad \begin{aligned} & A=a^2 \sin ^2 \varphi+b^2 \cos ^2 \varphi, \\ & B=a^2 \cos ^2 \varphi+b^2 \sin ^2 \varphi, \\ & C=0, \\ & D=0, \\ & E=2(a^2-b^2)\sin\varphi\cos\varphi, \\ & F=-a^2 b^2 . \end{aligned} $$

The horizontal extrema occur where the partial derivative in the $y$ direction vanishes:

$$ \frac{\partial P}{\partial y}=2 B y+E x=0 \quad \Leftrightarrow \quad y=\frac{-E x}{2 B} $$

After we substitute this value of $y$ into the ellipse equation, we get:

$$ \left(A-\frac{E^2}{4 B}\right) x^2+F=0 $$

This ellipse's horizontal and vertical extrema are as follows:

$$ x= \pm \sqrt{\frac{F}{\frac{E^2}{4 B}-A}}= \pm \sqrt{a^2 \cos ^2 \varphi+b^2 \sin ^2 \varphi}, \quad \quad y= \pm \sqrt{a^2 \sin ^2 \varphi+b^2 \cos ^2 \varphi}. $$

If we want to adjust the ellipse's eccentricity, or how flat or round the ellipse is, we can introduce a tuning parameter $\alpha$. With a very large $\alpha$, the axes $a$ and $b$ both converge to $r$, so their ratio converges to 1. With $\alpha=1$, the ratio $a/b$ reaches a maximum of 4 when the anisotropy $A$ equals 1. The values $a$ and $b$ are governed by this formula:

$$a=\frac{\alpha+A}{\alpha} r \quad \text{ and } \quad b=\frac{\alpha}{\alpha+A} r$$

Now, to get the value $w_k(x, y)$, we sample from the texture $K_0$ that was defined in the section on circular kernels:

$$ w_k(x, y)=K_0\left(\left(\begin{array}{c} 0.5 \\ 0.5 \end{array}\right)+R_{-2 \pi k / N} S R_{-\varphi}(x, y)\right). $$

Note that in order to calculate $w_k$, we need the formula for $S$, which is:

$$ S=\left(\begin{array}{cc} \frac{1}{2 a} & 0 \\ 0 & \frac{1}{2 b} \end{array}\right). $$

Also note that the product $S R_{-\varphi}$ maps points from the ellipse to a circle of radius $0.5$.

We then apply the same logic as in the circular filter to compute the result of the filter.
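The ellipse geometry above can be checked numerically with a short Python sketch. The function names and the default `alpha = 1.0` are assumptions for illustration; `to_unit_disc` applies $S R_{-\varphi}$ under the assumption that the ellipse's major axis points along the angle $\varphi$.

```python
import math


def ellipse_axes(anisotropy, radius, alpha=1.0):
    """Semi-axes (a, b) from the anisotropy A, the radius r, and alpha."""
    a = radius * (alpha + anisotropy) / alpha
    b = radius * alpha / (alpha + anisotropy)
    return a, b


def bounding_extent(a, b, phi):
    """Horizontal and vertical extrema (max |x|, max |y|) of the ellipse."""
    c, s = math.cos(phi), math.sin(phi)
    max_x = math.sqrt(a * a * c * c + b * b * s * s)
    max_y = math.sqrt(a * a * s * s + b * b * c * c)
    return max_x, max_y


def to_unit_disc(x, y, a, b, phi):
    """Apply S R_{-phi}: rotate by -phi, then scale by 1/(2a) and 1/(2b)."""
    c, s = math.cos(phi), math.sin(phi)
    xr = c * x + s * y
    yr = -s * x + c * y
    return xr / (2.0 * a), yr / (2.0 * b)
```

For example, with `radius = 4`, `alpha = 1`, and full anisotropy, `ellipse_axes` gives `a = 8` and `b = 2`, which is the maximum axis ratio of 4. The bounding extent tells the shader how many pixels around the current one need to be visited.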

Here is the source code for our implementation of the Kuwahara filter based on anisotropic kernels.

Creating the Noir Shaders

In order to compute the noir shaders, we first need to determine whether a pixel is along an edge. We do this by implementing the Sobel filter: if the value output by the Sobel filter is greater than a threshold, we say that the pixel is along an edge. We compute that as follows:

$$ \mathbf{G}_x=\left[\begin{array}{ccc} +1 & 0 & -1 \\ +2 & 0 & -2 \\ +1 & 0 & -1 \end{array}\right] * \mathbf{A} \quad \text { and } \quad \mathbf{G}_y=\left[\begin{array}{ccc} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{array}\right] * \mathbf{A} $$

Here, $\mathbf G_x$ and $\mathbf G_y$ are the horizontal and vertical derivative approximations, and $\mathbf A$ is the source image. The two matrices are each convolved with the source image $\mathbf A$.

Using these approximations, we calculate the gradient as follows:

$$ \mathbf{G}=\sqrt{\mathbf{G}_x^2+\mathbf{G}_y^2} $$

If the gradient magnitude is greater than a variable called EdgeSlope, which we have set to $0.23$, we consider the pixel to be along an edge.

For pixels along an edge, we draw a white pixel and set the opacity value to be very high.
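The edge test can be sketched in Python as follows. This is an illustration on a grayscale image stored as a list of rows, not our shader code; the function names and the border clamping are assumptions, while `EDGE_SLOPE` mirrors the EdgeSlope threshold from the text.

```python
import math

SOBEL_X = ((1, 0, -1), (2, 0, -2), (1, 0, -1))
SOBEL_Y = ((1, 2, 1), (0, 0, 0), (-1, -2, -1))
EDGE_SLOPE = 0.23  # threshold from the text


def sobel_magnitude(gray, x, y):
    """Gradient magnitude sqrt(Gx^2 + Gy^2) at pixel (x, y)."""
    height, width = len(gray), len(gray[0])
    gx = gy = 0.0
    for j in range(3):
        for i in range(3):
            # Assumption: clamp coordinates at the image border.
            px = min(max(x + i - 1, 0), width - 1)
            py = min(max(y + j - 1, 0), height - 1)
            value = gray[py][px]
            # The kernel is applied without flipping; flipping only changes
            # the sign of gx and gy, not the magnitude.
            gx += SOBEL_X[j][i] * value
            gy += SOBEL_Y[j][i] * value
    return math.hypot(gx, gy)


def is_edge(gray, x, y, threshold=EDGE_SLOPE):
    """True if the pixel's gradient magnitude exceeds the threshold."""
    return sobel_magnitude(gray, x, y) > threshold
```

On an image whose left columns are 0 and whose right columns are 1, the pixels next to the boundary are flagged as edges, while pixels inside either flat region are not.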

The pencil shaders re-create an effect similar to that of a shaded yet mostly black-and-white manga. They use the same edge test as above: if the value output by the Sobel filter is greater than the threshold, the pixel is along an edge, and we draw a white pixel with a very high opacity. Otherwise, we color in the pixel with a pencil texture, based on the average color channel intensity, $0.33 \cdot (r+g+b)$. Finally, we add a noise texture to the image to make it look more like a pencil drawing.

The pencil texture is sampled to weight the color-banded image, in which colors are flattened into bands via floor division. In addition, we use the linearized depth buffer to detect edges based on differences in z values.

This creates an effect similar to a pencil drawing.

Here is a comparison between the base shaders and the noir pencil shader.

Creating the Cel Shaders

The cel (toon) shader is, in essence, another shader that uses floor division to flatten colors into discrete bands, combined with bold outlines to reinforce the cartoon effect. To accurately pinpoint the edges, we sample the Z-buffer to get the relative depth difference between adjacent pixels, which gives the slope of the edge in the spirit of the Sobel filter. However, since the game already has a cartoon-like art style, the effect is not very pronounced.
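The two ingredients of this shader, color banding by floor division and depth-based outlines, can be sketched in Python as below. The number of bands, the depth threshold, the four-neighbor comparison, and the black outline color are all assumptions chosen for the example rather than the values used in our shader.

```python
import math


def posterize(color, levels=4):
    """Flatten each channel in [0, 1] into discrete bands via floor division."""
    return tuple(math.floor(c * levels) / levels for c in color)


def is_depth_edge(depth, x, y, threshold=0.05):
    """True if any 4-neighbor's depth differs from the center by > threshold."""
    height, width = len(depth), len(depth[0])
    center = depth[y][x]
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx = min(max(x + dx, 0), width - 1)
        ny = min(max(y + dy, 0), height - 1)
        if abs(depth[ny][nx] - center) > threshold:
            return True
    return False


def cel_shade(color, depth, x, y, levels=4, threshold=0.05):
    """Outline pixels on depth discontinuities; band the color elsewhere."""
    if is_depth_edge(depth, x, y, threshold):
        return (0.0, 0.0, 0.0)  # assumed outline color
    return posterize(color, levels)
```

With four bands, a color such as `(0.1, 0.5, 0.99)` becomes `(0.0, 0.5, 0.75)`, which produces the discrete transitions described above.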

Here is a comparison between the base shaders and the cel shader.

Problems Encountered and How We Tackled Them

We encountered a lot of problems in building the shader programs. At first, we were unfamiliar with the syntax and did not know why the shaders were failing, since we had no information to debug with. Over time, we learned more about the HLSL-like FX language and were able to start debugging our shader implementations through the console. We also had some design decisions to make for the noir shader, since drawing a mixture of black and white borders did not achieve as good a visual effect as drawing only white borders. We also ran into an issue with the depth buffer, since the foreground and the background are located at different depths. We resolved this by experimenting with only the RGB values and only the depth buffer, and we ended up using a combination of both.

Lessons Learned

Upon completion of this project, we had learned how to write ReShade FX code and how to create shaders for a different rendering engine. We also learned a lot more about writing shader code in general, including a range of filtering techniques and how to create custom textures.